Apache Flume Environment Setup

The system requirements for installing Apache Flume are listed below.

System Requirements -

- Java Runtime Environment - Java 1.8 or later (required by recent Flume releases, including the 1.11.0 release used below)

- Memory - Sufficient memory for configurations used by sources, channels or sinks

- Disk Space - Sufficient disk space for configurations used by channels or sinks

- Directory Permissions - Read/Write permissions for directories used by agent
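The requirements above can be checked from a terminal before installing. The following is a minimal sketch, not an official Flume tool: the helper names `java_major`, `dir_rw` and `check_java` and the `FLUME_DIR` default are hypothetical and should be adapted to your system.

```shell
#!/bin/sh
# Pre-flight sketch for the requirements listed above.
# FLUME_DIR is an assumed location for the agent's working directory.

# Print the major Java version for a version string such as
# "1.8.0_392" (prints 8) or "17.0.9" (prints 17).
java_major() {
  case "$1" in
    1.*) set -- "${1#1.}" ;;   # legacy "1.x" scheme: drop the leading "1."
  esac
  echo "${1%%[._-]*}"          # keep the text before the first separator
}

# Succeed if the directory exists and is readable and writable.
dir_rw() {
  [ -d "$1" ] && [ -r "$1" ] && [ -w "$1" ]
}

# Report the Java version found on PATH, if any.
check_java() {
  if ! command -v java >/dev/null 2>&1; then
    echo "java: not found on PATH"
    return 1
  fi
  # `java -version` writes to stderr; the version is the quoted token.
  set -- "$(java -version 2>&1 | head -n 1 | sed -n 's/.*"\(.*\)".*/\1/p')"
  echo "java: version $1 (major $(java_major "$1"))"
}

FLUME_DIR="${FLUME_DIR:-${HOME:-/tmp}/Flume}"

check_java || true
if dir_rw "$FLUME_DIR"; then
  echo "dir:  $FLUME_DIR is readable and writable"
else
  echo "dir:  $FLUME_DIR is missing or not readable/writable"
fi
# Free disk space on the filesystem that holds the home directory.
df -h "${HOME:-/}" | tail -n 1
```

How much memory and disk space is "sufficient" depends on the channels and sinks you configure, so the script only reports what is available rather than enforcing a threshold.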

Hadoop must also be installed before proceeding with the Flume installation. The Hadoop installation procedure can be found here.

Installing the Flume Tarball -

The Flume tarball is a self-contained package that has everything required to use Flume on a Unix-like system. Follow the steps below to set up Flume.

Step1:

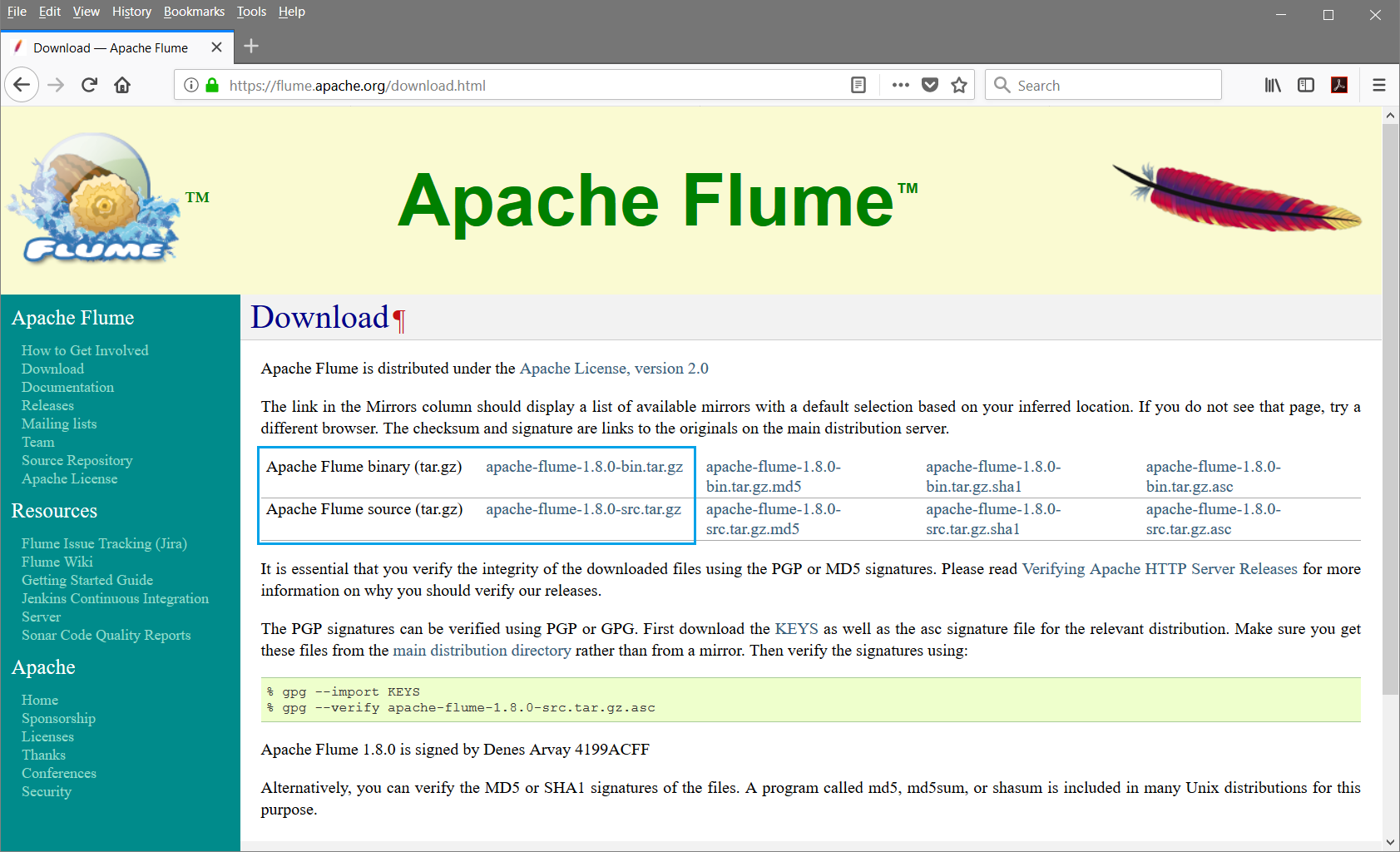

Download the Flume tarball from:

https://flume.apache.org/download.html

Download both the binary and the source archives from this page.

Step2:

Click apache-flume-1.11.0-bin.tar.gz; the link redirects to a list of mirror sites from which the file can be downloaded. Once the binary download has completed, click apache-flume-1.11.0-src.tar.gz, which likewise redirects to the mirror sites.
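On a machine without a browser, the same two archives can be fetched from the command line. The sketch below assumes the release is served from the Apache archive under `archive.apache.org/dist/flume/<version>/` and that `wget` is installed; confirm the actual location on the download page before relying on it. `flume_url` and `fetch_flume` are hypothetical helper names.

```shell
#!/bin/sh
# Sketch: build the download URLs for a Flume release.
# ASSUMPTION: the Apache archive layout shown in BASE_URL; verify it
# against https://flume.apache.org/download.html.

FLUME_VERSION="${FLUME_VERSION:-1.11.0}"
BASE_URL="https://archive.apache.org/dist/flume"

# Print the URL for one flavour of the release: "bin" or "src".
flume_url() {
  echo "$BASE_URL/$FLUME_VERSION/apache-flume-$FLUME_VERSION-$1.tar.gz"
}

# Download one flavour into the current directory (requires wget).
fetch_flume() {
  wget "$(flume_url "$1")"
}

echo "Binary: $(flume_url bin)"
echo "Source: $(flume_url src)"

# Uncomment to download both archives:
# fetch_flume bin
# fetch_flume src
```

The download page also publishes checksums and signatures for each archive; comparing them against the downloaded files is worth doing whichever way the files were obtained.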

Step3:

Create a directory for Flume in the same location where Hadoop and HBase are installed. If the directory does not already exist, create it with the command below.

$ mkdir Flume

Step4:

Unpack the Flume archives in the directory where they were downloaded.

$ cd Downloads/

$ sudo tar -zxvf apache-flume-1.11.0-bin.tar.gz

$ sudo tar -zxvf apache-flume-1.11.0-src.tar.gz

Step5:

Move the unpacked files to the Flume directory created earlier.

$ sudo mv apache-flume-1.11.0-bin/* /home/Hadoop/Flume/
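Steps 3 to 5 can also be collapsed into a single extraction that unpacks straight into the target directory. This is a sketch under stated assumptions: `install_flume` is a hypothetical helper, the `TARBALL` and `DEST` defaults are illustrative, and the `--strip-components` option is the one provided by GNU tar and bsdtar.

```shell
#!/bin/sh
# Sketch: create the Flume directory and unpack the binary tarball into it.
# TARBALL and DEST are assumed locations; override them for your setup.

# install_flume TARBALL DEST
# Unpacks TARBALL so that DEST/bin, DEST/conf, ... exist directly under
# DEST. --strip-components=1 drops the archive's top-level directory
# (apache-flume-<version>-bin/), which replaces the separate mv step.
install_flume() {
  mkdir -p "$2" &&
  tar -xzf "$1" -C "$2" --strip-components=1
}

TARBALL="${TARBALL:-${HOME:-.}/Downloads/apache-flume-1.11.0-bin.tar.gz}"
DEST="${DEST:-${HOME:-.}/Flume}"

if [ -f "$TARBALL" ]; then
  install_flume "$TARBALL" "$DEST" && echo "Flume unpacked into $DEST"
else
  echo "Tarball not found: $TARBALL"
fi
```

Unpacking into a directory you own avoids the need for `sudo`; if the target is a system location such as /home/Hadoop/Flume owned by another user, run the script with the appropriate privileges instead.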

Flume Configuration -

Three files need to be modified to configure Flume.

.bashrc

To complete the configuration of a tarball installation, the PATH variable must be set to include the bin/ subdirectory of the directory where Flume is installed. With the directory used in the steps above, that is:

$ export PATH=/home/Hadoop/Flume/bin:$PATH
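An `export` typed at the prompt lasts only for the current shell session, so the line is usually appended to `.bashrc`. The sketch below does that without adding duplicates on repeated runs; `add_line` is a hypothetical helper and the Flume path in the usage comment is an assumption to replace with your own.

```shell
#!/bin/sh
# Sketch: append a line to a file only if it is not already present.

# add_line FILE LINE
# grep -x matches whole lines, -F treats LINE as a fixed string and
# -q suppresses output; the line is appended only when grep finds nothing.
add_line() {
  touch "$1"
  grep -qxF -- "$2" "$1" || printf '%s\n' "$2" >> "$1"
}

# Usage (assumed install path; single quotes keep $PATH unexpanded so
# it is evaluated each time .bashrc is read):
#   add_line "$HOME/.bashrc" 'export PATH=/home/Hadoop/Flume/bin:$PATH'
#   . "$HOME/.bashrc"
```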

flume.conf

Flume provides a template for flume.conf, named conf/flume-conf.properties.template, and a template for flume-env.sh, named conf/flume-env.sh.template.

Copy the Flume template properties file conf/flume-conf.properties.template to conf/flume.conf:

$ sudo cp conf/flume-conf.properties.template conf/flume.conf

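Edit flume.conf to define the sources, channels and sinks of your agent. The file can equally be named flume-conf.properties (the template's name without the .template suffix); whichever name is used must be the one passed to flume-ng with --conf-file.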
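To give an idea of what goes into this file, the sketch below prints a small single-agent configuration modeled on the single-node example in the Flume User Guide: a netcat source, a memory channel and a logger sink. The agent name `a1`, the component names `r1`, `c1`, `k1`, the port and the capacities are illustrative choices, and `sample_conf` is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: print a minimal Flume agent configuration to stdout.
# All names and values are illustrative, not required by Flume.

sample_conf() {
  cat <<'EOF'
# Name the components of agent a1
a1.sources = r1
a1.channels = c1
a1.sinks = k1

# Source: listen for lines of text on a local TCP port
a1.sources.r1.type = netcat
a1.sources.r1.bind = localhost
a1.sources.r1.port = 44444

# Channel: buffer events in memory
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Sink: write events to the agent's log
a1.sinks.k1.type = logger

# Wire the source and the sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
EOF
}

sample_conf
```

Redirecting the output, for example `sample_conf > conf/flume.conf`, produces a file that can be started with `flume-ng agent --conf conf --conf-file conf/flume.conf --name a1`, where the value of `--name` must match the agent prefix used inside the file.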

flume-env.sh

Copy the template file conf/flume-env.sh.template to conf/flume-env.sh.

$ sudo cp conf/flume-env.sh.template conf/flume-env.sh

Edit the flume-env.sh file and set JAVA_HOME to the directory where Java is installed on your system.

export JAVA_HOME=/home/Hadoop/java
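If the Java installation directory is not known, it can often be derived from the `java` binary on the PATH. The sketch below assumes the usual `<JAVA_HOME>/bin/java` layout and a `readlink` that supports `-f` (GNU coreutils); `java_home_from` is a hypothetical helper, and the printed value should be checked before it is pasted into flume-env.sh.

```shell
#!/bin/sh
# Sketch: suggest a JAVA_HOME value based on the java binary in use.

# java_home_from PATH_TO_JAVA
# Strips the trailing /bin/java, e.g. /opt/jdk/bin/java -> /opt/jdk.
java_home_from() {
  dirname "$(dirname "$1")"
}

if command -v java >/dev/null 2>&1; then
  # /usr/bin/java is frequently a chain of symlinks; -f resolves them.
  real_java="$(readlink -f "$(command -v java)")"
  echo "export JAVA_HOME=$(java_home_from "$real_java")"
else
  echo "java not found on PATH; set JAVA_HOME by hand"
fi
```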

Verifying the Installation -

Verify the installation with the command below:

$ flume-ng help

The command returns output similar to the following.

    Usage: /usr/bin/flume-ng <command> [options]...

    commands:
      help                    display this help text
      agent                   run a Flume agent
      avro-client             run an avro Flume client
      version                 show Flume version info

    global options:
      --conf,-c <conf>        use configs in <conf> directory
      --classpath,-C <cp>     append to the classpath
      --dryrun,-d             do not actually start Flume, just print the command
      --Dproperty=value       sets a JDK system property value

    agent options:
      --conf-file,-f <file>   specify a config file (required)
      --name,-n <name>        the name of this agent (required)
      --help,-h               display help text

    avro-client options:
      --rpcProps,-P <file>    RPC client properties file with server connection params
      --host,-H <host>        hostname to which events will be sent (required)
      --port,-p <port>        port of the avro source (required)
      --dirname <dir>         directory to stream to avro source
      --filename,-F <file>    text file to stream to avro source [default: std input]
      --headerFile,-R <file>  headerFile containing headers as key/value pairs on each new line
      --help,-h               display help text

Either --rpcProps or both --host and --port must be specified.

Note that the flume-ng command must be on the $PATH, which means the bin/ subdirectory of the Flume installation has to be included in it.
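A quick way to tell a PATH problem apart from a broken installation is to test for the command before running it. The following is a small sketch; `on_path` is a hypothetical helper, while `version` is one of the flume-ng commands listed in the help text.

```shell
#!/bin/sh
# Sketch: confirm flume-ng is reachable, then print its version.

# on_path CMD: succeed if CMD resolves to something the shell can run.
on_path() {
  command -v "$1" >/dev/null 2>&1
}

if on_path flume-ng; then
  echo "flume-ng found at: $(command -v flume-ng)"
  flume-ng version
else
  echo "flume-ng is not on PATH."
  echo "Add the bin/ directory of the Flume installation to PATH and retry."
fi
```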