Apache Flume Introduction

What is Apache Flume?

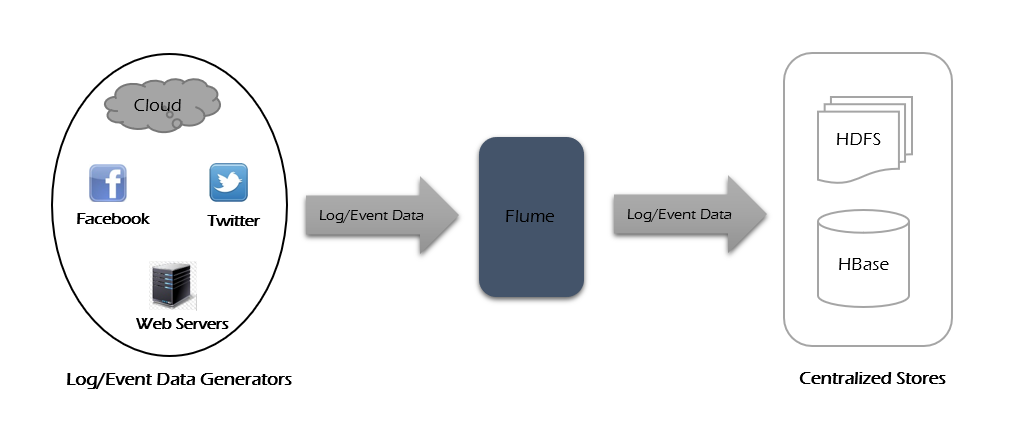

Apache Flume is an open-source tool for collecting, aggregating, and moving large volumes of streaming data, such as logs or events, into a centralized storage system such as HDFS or HBase.

It is built to be reliable, meaning it is designed so that data is not lost in transit, and distributed, so the work can be spread across multiple machines.

Flume was originally designed to copy log data from web servers into Hadoop storage, automating data movement that would otherwise be done by hand.

Usage of Flume

Flume is commonly used in scenarios such as the following:

- E-commerce insights: Online shops can use Flume to gather user logs by region and send them straight to Hadoop for analysis—helping businesses learn more about customer behavior.

- Fast log ingestion: It speeds up moving application server logs into HDFS, saving you from slow, manual, or batch uploads.

- Event streaming: Beyond logs, Flume handles streams of events from systems or social platforms smoothly—all the data goes into your central store.

- Broad use cases: Whenever you need to stream large volumes of structured or semi-structured data into a system like Hadoop quickly and reliably, Flume is a strong candidate.

Flume Advantages

Here are some key advantages of using Flume:

- Flexible storage options: Flume can deliver data to a range of centralized destinations, including HDFS and HBase, so you are not tied to a single store.

- Smooth data flow: When data arrives faster than the destination can write it, Flume's channels buffer the events between source and sink, absorbing bursts and keeping the flow steady.

- Smart routing: Contextual routing helps direct data to different sinks based on its content or rules you set.

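- Reliable delivery: Flume uses two transactions per event (one when the source puts it into the channel, one when the sink takes it out). An event is removed from the channel only after the next hop has accepted it, which is what underpins Flume's delivery guarantee. Durability across an agent restart also depends on the channel type: a file-backed channel keeps its events, while an in-memory channel does not.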

- Tough and scalable: It's reliable, fault-tolerant, scalable, manageable, and highly customizable.
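The buffering and two-transaction points above can be illustrated with a small toy model. This is not Flume's API. `ToyChannel`, `put_batch`, `take_batch`, and `ChannelFullError` are hypothetical names invented for this sketch, and it assumes a simple bounded in-memory queue. The idea it shows is that a batch enters the buffer all at once or not at all, and leaves the buffer only if the destination accepts it.

```python
from collections import deque


class ChannelFullError(Exception):
    """Raised when a put would exceed the channel's capacity."""


class ToyChannel:
    """Hypothetical stand-in for a channel: a bounded FIFO buffer."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._events = deque()

    def __len__(self):
        return len(self._events)

    def put_batch(self, events):
        """Source-side transaction: accept the whole batch or none of it."""
        events = list(events)
        if len(self._events) + len(events) > self.capacity:
            raise ChannelFullError("channel full; the source should retry later")
        self._events.extend(events)

    def take_batch(self, max_events, deliver):
        """Sink-side transaction: drop events only if deliver() succeeds."""
        count = min(max_events, len(self._events))
        batch = [self._events.popleft() for _ in range(count)]
        try:
            deliver(batch)
        except Exception:
            # Rollback: return the batch to the head of the queue, in order.
            self._events.extendleft(reversed(batch))
            return 0
        return len(batch)


def failing_sink(batch):
    """Simulates a destination that is temporarily unavailable."""
    raise OSError("destination unavailable")


channel = ToyChannel(capacity=5)
channel.put_batch(["e1", "e2", "e3"])

stored = []
failed = channel.take_batch(2, failing_sink)   # rolled back, nothing lost
delivered = channel.take_batch(2, stored.extend)  # committed
```

After the failed attempt the channel still holds all three events, so the later successful attempt delivers `e1` and `e2` in their original order. A real deployment would also have to decide whether the buffer survives a process crash, which this in-memory sketch does not.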

Flume Features

Some notable features of Flume include:

- Multi-server log ingestion: Flume can pull logs from many web servers and aggregate them into a single storage system like HDFS or HBase.

- Real-time streaming support: It moves data as it's generated—no waiting for batches or manual uploads.

- Large event volume handling: It doesn’t just handle logs—it can ingest social media events or metrics from platforms like Amazon or Twitter.

- Flexible connectors: Supports a huge range of sources and sinks, making it easy to plug Flume into most data systems.

- Advanced flow patterns: You can set up multi-hop pipelines, fan-in/out, and use contextual routing to manage complex data flows.

- Horizontal scalability: As data volume grows, you can add more Flume agents to share the load.
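Contextual routing and fan-out, mentioned in the list above, come down to choosing destinations from an event's metadata. Flume configures this declaratively (its multiplexing channel selector routes on an event header), so the Python below is only a toy model of the idea. The function `route_events`, the `region` header, and the destination names are all hypothetical.

```python
from collections import defaultdict


def route_events(events, header, mapping, default):
    """Group event bodies by destination, chosen from one header value.

    mapping maps a header value to a list of destination names. Events whose
    header is missing or unmapped go to the default destinations.
    """
    routed = defaultdict(list)
    for event in events:
        value = event["headers"].get(header)
        for destination in mapping.get(value, default):
            routed[destination].append(event["body"])
    return dict(routed)


events = [
    {"headers": {"region": "eu"}, "body": "GET /cart"},
    {"headers": {"region": "us"}, "body": "GET /home"},
    {"headers": {}, "body": "GET /health"},
]

routed = route_events(
    events,
    header="region",
    # "us" fans out to two destinations; "eu" goes to one.
    mapping={"eu": ["hdfs_eu"], "us": ["hdfs_us", "hbase_us"]},
    default=["hdfs_other"],
)
```

Here the `us` event is copied to two destinations (fan-out), the `eu` event goes to one, and the event with no `region` header falls through to the default.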